Discover more from Oxymoron

Last week I was invited to take part in a debate, provocatively titled ‘Your use of AI does more harm than good’, at the B Corp Festival in Oxford. It took place in the rather surreal historic venue of Oxford Town Hall, built in 1897, which contrasted starkly with the futuristic topic being debated. It was amazing to see how much interest there was in the ethics and risks of AI in the B Corp community. The session was fully booked, and many people asked why this topic was not more central to the wider conference, given that it is one of the key ethical questions of our time and affects all businesses. I couldn’t agree more, so I’m sharing my part of the debate here for those of you who are interested.

The session was facilitated by Shankar Velupillai with speeches from Adam Wojsa, founder of the machine learning company Numlabs, Andrew Tilling, founder of The Hive Consultancy, and myself.

Adam made the case that technology is ethically neutral and that responsibility for its safety lies with the humans at every stage of its development and use. Andrew made a beautiful case for the need for a culture that safeguards against AI risks and for harnessing the collective intelligence of humanity as a counterbalance to artificial intelligence. And I made a pitch for us to use the rise of AI as a wake-up call to reconnect with all of the things that make us special as humans and embrace our full humanness.

As you’d expect from the B Corp community, it was an extremely friendly debate with a real openness in the room to listen to different perspectives. So without further ado, here’s mine…

Is our use of AI doing more harm than good?

In this instance, I'm using the term AI to refer specifically to generative AI technologies like GPT, Claude, Gemini and Llama that are central to the current hype, but perhaps more importantly, I'm going to talk about the longer term goal of these technologies and where they are heading. This goal is the creation of Artificial General Intelligence (AGI), which is an AI that has similar intelligence to humans across a wide range of tasks, and Artificial Super Intelligence (ASI), which is an AI that is more intelligent than the most intelligent humans in all cognitive disciplines.

My company, Wholegrain Digital, specialises in creating online experiences that are truly good for humans and have a low environmental impact. As a B Corp, we also want to try and create a truly good place to work that really nurtures people and cares for them as human beings.

The rise of AI therefore brings us many new challenges. Most notably, it brings the challenge of AI’s huge environmental impact, which far exceeds that of previous digital technologies. It also brings social risks such as the amplification of social bias and the spread of misinformation, not to mention the potential risks to employment. These risks alone should make us stop and think carefully about whether and how we use AI technologies, but there's a much bigger question to consider, and that is what I’m going to focus on here.

What does AI mean for us as humans?

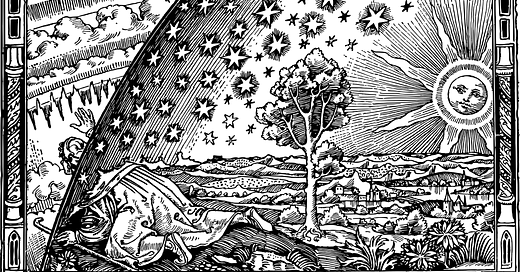

The philosopher Marshall McLuhan said that every new invention is not just an evolution in technology but an evolution of the human species. Every technology changes us into a different animal, but we only ever understand the change when we look in the rear view mirror. When we look forwards, we tend to assume that we’ll still be the same creatures but with new tools, not comprehending how we ourselves will be different.

For example, humans before the invention of the spear were different animals from humans after the invention of the spear. Humans before the invention of the printing press were different animals from humans after the invention of the printing press.

Long before AI, McLuhan was concerned about what he called the “electric media”, meaning radio, television, and towards the end of his life, computers, which he believed were accelerating the evolution of humanity far too fast for us to adapt to in a healthy way.

To give a couple of examples, the introduction of television enabled the mass dissemination of information and entertainment, but it also contributed to the homogenization of culture, to us living more sedentary lifestyles and all the associated health implications, as well as the breakdown of community, as people stopped gathering in their local social spaces and increasingly spent their free time sitting in their homes staring at a box.

To take another technology, GPS makes it super easy to find our way to places, but our use of it weakens our ability to understand the landscape in which we live. We stop asking other humans for directions and walk around towns looking down at our little glass tablets instead of making eye contact with other humans or looking up to observe the environment around us. We certainly never pay attention anymore to the sun or the stars for direction. If I ask you right now to point south, could you do it? Perhaps more profoundly, GPS puts us at the centre of the map, showing the world literally revolving around us and causing us to lose perspective of the bigger context in which we exist.

Every technology gives us a new power, but it also takes something away from us. With every new technology, we risk becoming a little less human, and this is never more true than with AI.

If we look ahead and assume that Artificial Super Intelligence will be achieved, and I admit that is a big assumption, we would no longer be the most intelligent creatures on Earth. Those lucky enough to have access to it would, in effect, have access to a brain more intelligent than their own. And if they had that, why would they use their own? And if we don't think for ourselves, what do we then become? What is the purpose of life? And what is the value of human life?

The truth is that we don’t even need Artificial Super Intelligence for this dilemma to come into effect, as the generative AI tools already available today are sufficiently powerful to tempt us into relying on them for things that we would previously have used our own minds for.

So I think we face a choice.

Do we let AI do the thinking and let our minds atrophy, in turn becoming zombies? And if so, zombies controlled by who or by what? Does AI become our false god?

Or do we tune back into our innate human abilities and proactively exercise those muscles, keeping AI carefully in its place simply as a tool to serve humanity?

I believe that those of us with access to AI should make a concerted effort to do the latter. That means strengthening our own emotional intelligence and empathy. It means exercising our imagination and true creativity, which I personally don’t believe that AI is capable of. It means developing our critical thinking and moral reasoning, strengthening our connection to nature and to each other, as well as tuning in to our intuition and embracing our spirituality.

I think we should be doing this as individual human beings in our own lives, but also in our families, communities and within our organisations. If we do this, I believe that we can find a healthy coexistence with AI, but this is not the default outcome. The default outcome is that we mindlessly adopt AI and lose ourselves in the process, leading to the end of humanness. No regulation can protect us against that.

So we must not use AI to be lazy, but must be proactive in working on ourselves to become better humans in a world where it is easy not to.

As to the question of whether AI will do more harm than good, I think the choice is very much in our hands. While I might disagree with Adam on the inherent neutrality of technology, I do agree that we have free will and don’t have to be passively swept away by the current. Paradoxically, while AI might seem to be dragging us into a less human future, it might just be the thing that wakes us up and prompts us to look deep within ourselves to ask what really makes us special and unique as human beings. If enough of us get that wake-up call, then maybe, just maybe, it could actually be a catalyst for positive social change. We face a fork in the evolution of humanity, and we must each decide which way we want to go.

If you’re interested in the topic of how to use AI responsibly within your organisation, then do check out Wholegrain’s guide to the ethical use of AI.

This is a super broad topic, and the perspective I’ve shared here is just that: one of many possible perspectives. I’d love to hear yours, so post a comment below or on LinkedIn if you feel open to sharing.
